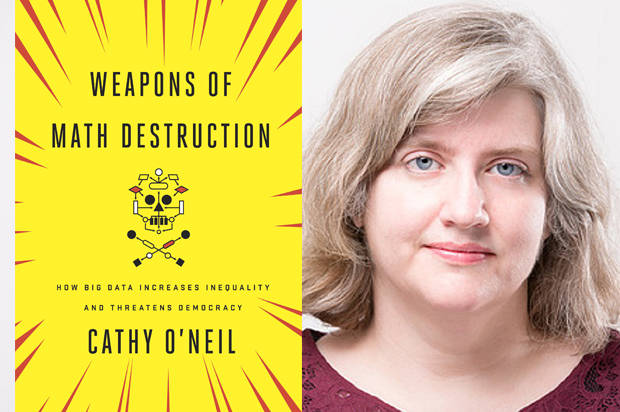

Weapons of Math Destruction – Cathy O’Neil

a.) summarizes the main takeaways of the book for classmates who have not had the opportunity to read it

Cathy O’Neil’s book “Weapons of Math Destruction” examines how mathematical models and algorithms are frequently employed to support and maintain systemic injustice and inequality. According to O’Neil, these “Weapons of Math Destruction” (WMDs) have serious adverse effects on people and society as a whole, especially on vulnerable and marginalized groups. The book offers numerous examples of WMDs being used in various fields, including hiring, advertising, education, and criminal justice. O’Neil, for instance, demonstrates how discriminatory and unfair predictive models may be when used to assess a teacher’s performance or a person’s creditworthiness.

The key lessons to be learned from the book include the necessity for increased accountability and openness in the design and use of algorithms, as well as the significance of adding moral considerations into algorithmic decision-making. The book also emphasizes the potential for algorithms to reinforce and magnify prejudices, highlighting the significance of diversity and inclusion in the technology sector.

In the Introduction to the book, the author sets the stage for her argument by describing how algorithms are increasingly being used to make decisions that have significant consequences for people’s lives, such as who gets hired or fired, who gets a loan or a mortgage, and who gets sent to prison. She notes that these algorithms are often proprietary and secret, meaning that the people affected by them have no way of knowing how they work or of challenging their decisions.

There’s a popular saying that “men lie, women lie, but numbers don’t.” Because people tend to believe that ‘numbers don’t lie,’ many are cowed into submission by anything that is based on numbers. However, in “Weapons of Math Destruction” the author shows how warped mathematical and statistical models are used in algorithms to work against ordinary people. These ‘algorithmic’ decisions tend to entrench existing inequalities by empowering the rich and powerful against the helpless masses. She debunks the notion of algorithmic neutrality with the argument that algorithms are based on data obtained from the recorded behaviors and choices of people, most of which are flawed.

The author confirms Kate Crawford’s observation that obscuring by mystification is used to conceal the truth from the people affected. When confronted, computer scientists tend to give answers which suggest that the internal operations of the algorithms are ‘unknowable,’ thereby slamming the door on all questioning. In line with the theory of political economy, the author observes that the effectiveness of algorithms is evaluated based on their ability to bring in the currency, i.e. political power for politicians and money for businesses, but never on their effect on the people affected. Examples include the use of value-added modelling against teachers and scheduling software that optimizes profits while exploiting desperate people and worsening workers’ conditions and social lives. Another is political microtargeting, which undermines democracy and gives politicians an avenue to be elusive by being ‘many things to many people.’

b.) connects the book to our class conversations:

The book makes various connections to our class discussions on Feminism and Feminist Text Analysis. First, it draws attention to the ways in which algorithms can perpetuate systemic prejudices and discrimination, which can have serious adverse effects on disadvantaged and vulnerable communities, including women. Second, the book emphasizes the significance of including other viewpoints and voices in algorithmic decision-making processes, which is consistent with the intersectionality tenet of feminism. Finally, the book advocates for algorithmic decision-making to be more open and accountable, which is crucial for guaranteeing fairness and equity for all people, particularly women and other underrepresented groups.

c.) suggests what perspectives or new avenues of research and thought the book adds to the landscape of computational text analysis.

The book expands the field of computational text analysis by introducing a number of fresh viewpoints and lines of inquiry. One of its major contributions is shedding light on the unfair application of mathematical models and algorithms in decision-making procedures like hiring, lending, and criminal justice, which can have a major impact on people’s lives. The book casts doubt on the idea of algorithmic neutrality by demonstrating how algorithms are built on flawed data derived from observed human actions and decisions, producing biased results that frequently worsen existing disparities.

Moreover, the book highlights the impact of algorithmic decision-making on people: it reduces them to insignificant numbers and ignores their personal histories, psychological conditions, and interpersonal interactions. It exposes the potential biases and inequities inherent in algorithmic judgments and emphasizes the need to address the ethical implications of relying only on algorithms to analyze human stories.

Since many algorithms employed in significant decision-making processes are proprietary and secret, it can be challenging for those who are affected by these judgments to understand how they operate or to contest them. This is why the book examines the topic of transparency and accountability in algorithmic decision-making. It highlights the need for greater accountability and transparency in the creation and use of algorithms and urges readers to evaluate these tools’ effects on society with greater knowledge and critical thought. The book also raises the use of computational analysis in domains like education, where algorithms are employed to evaluate teachers, along with the potential biases and limitations of such evaluations. It promotes deeper study and reflection on the creation of moral and just algorithms that take into account the intricate social and cultural influences on text data and analysis.

d.) Own critical reflections

In Chapter 7, “Sweating Bullets,” the author highlights an important issue: the unfair use of past records and WMDs (weapons of math destruction) to screen job candidates, resulting in the blacklisting of some and the disregard of many others. When we rely on an algorithmic product to analyze human stories, individuals become mere numbers. For instance, a hard-working teacher’s efforts for a day are reduced to 8 hours in the database. Similarly, the practice of “clopening” operates on the same principle. The machine does not care about an individual’s mental stress, personal preferences, or relationships; it only considers the additional hours worked. The Cataphora software system operates in the same manner. During the 2008 recession, companies used the software’s output to lay off employees who were represented by small, dim circles on its chart. While I agree with most of the author’s statements, I remain optimistic that with advancements in AI, the damage caused by WMDs can be reduced. Although I am unsure of how this can be achieved, the author has addressed many of the problems, and solutions may exist.

This chapter also presents Tim Clifford’s teacher evaluation case, which reminded me of the Student Evaluation of Teaching that is conducted every semester at City Tech, as well as at all other CUNY undergraduate colleges. These evaluations allow students to provide feedback on their classes before the final exams, which is meant to avoid potential bias. The feedback is then gathered and analyzed to help instructors improve their teaching. Prior to the pandemic, City Tech used a paper version of the evaluations, where professors would receive forms for each class and ask students to fill them out in class. Instructors had to leave the room while students filled out the forms, and a student would then send the completed forms to the Assessment and Research office.

However, this evaluation process put pressure on some instructors, particularly those who were adjunct professors or had not yet received tenure. Some instructors chose not to distribute the forms to students, or filled out and submitted the forms themselves. Despite the potential for bias from students, I believe that the Student Evaluation of Teaching questions are reasonable and can help instructors improve their teaching methods. At the same time, I recognize that the evaluation process may not be entirely fair to instructors, and that algorithms used to evaluate teaching may also be subject to biases and inequalities. Therefore, it is crucial to prioritize the development of ethical and fair algorithms that account for the biases and inequalities present in our society.